Artificial Intelligence: Best Practices for Design Professionals

This is the third in a series of articles that address various issues associated with the increasing use of artificial intelligence (AI), and the effects and risks facing the design professional community as a result. This article focuses on the guiding principles that design professionals and their firms should consider when implementing AI into their practices.

AI offers numerous opportunities, not only to quickly identify answers and solutions to technical issues, such as identifying building code requirements in a particular jurisdiction, but also to generate design solutions for client consideration without incurring significant time and associated expenses or to develop automated construction, design, or damage detection, all at the touch of a button or in response to a verbal inquiry. As the use of AI becomes more accepted within the design professional community, the day may come when clients will, in fact, insist that professionals incorporate AI into their practice to, among other things, reduce the fees incurred to render a design. This is much the same way that a client would not authorize a professional to perform modeling calculations by hand when those calculations can more expeditiously, accurately, and cost-effectively be generated by using software or other technology. Moreover, failure to use the most sophisticated, reliable, and accurate technology-based tools available may at some point, in and of itself, be considered a breach of the Professional Standard of Care (PSOC).

The recommendations discussed in this article are intended to assist you, and your firm, in incorporating AI into your practice in a prudent, cautious, and efficient manner. The expectation is that by adhering to a set of established guidelines, you can help ensure a uniform and appropriate approach to integrating AI technology and its applications into your practice.

STANDARD OF CARE CONSIDERATIONS FOR AI

Although not the focus of this article,1 we note at the outset that professional services must be rendered in accordance with the applicable PSOC, whether defined contractually, statutorily, or otherwise. Typically, the applicable PSOC requires that your services be rendered consistent with the professional skill and care provided by professionals practicing in the same discipline, in similar localities, under the same or similar circumstances. In terms of incorporating AI into your practice, you may have to consider whether it would be a breach of the PSOC to not utilize AI under certain circumstances. In evaluating whether a design professional has met the PSOC in using AI in any particular context, consideration will be given to the AI used and whether the industry is widely using and relying upon it.

Regardless of AI’s role, the pertinent question is how a similarly qualified and experienced professional would have acted in analogous situations at the time of an alleged PSOC breach. This retrospective PSOC evaluation means that, for example, a design or engineering judgment made in 2027 about a 2024 incident must be based on the norms and practices of 2024, irrespective of subsequent advancements. A particular challenge with AI is its rapid evolution, which may blur the definition of the prevailing standards of use at the time of the alleged breach of the PSOC.

CURRENT REGULATORY FRAMEWORK FOR AI

As of the publication of this article, there are no specific federal regulatory frameworks in place addressing the use of AI by design professionals or any other professional community. However, in December 2020, the U.S. federal government issued Executive Order 13960, “Promoting the Use of Trustworthy Artificial Intelligence in the Federal Government.” Executive Order 13960 requires that certain federal agencies adhere to a prescribed set of principles when designing, developing, acquiring, or using AI for purposes other than national security or defense. According to the Executive Order, the federal government may use AI if it is:

- Lawful and respectful of our nation’s values

- Purposeful and performance-driven

- Accurate, reliable, and effective

- Safe, secure, and resilient

- Understandable

- Responsible and traceable

- Regularly monitored

- Transparent

- Accountable

In October 2023, the White House issued Executive Order 14110, “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.” Although this Executive Order is primarily aimed at developers of AI tools, it is worth highlighting a few of the high-level goals associated with this Executive Order to the extent that professionals are considering how to develop and implement their own best practices when it comes to the use of AI.

These goals include:

- Requiring that developers of AI systems share their safety test results and other critical information with the U.S. government.2 Practical application: Firms that render professional services for governmental agencies should ensure that any AI they rely on and use complies with this requirement.

- Developing standards, tools, and tests to help ensure that AI systems are safe, secure, and trustworthy. Practical application: If you or your firm are working on a federal governmental project, particularly one involving critical infrastructure sectors, you may be either completely restricted in using AI or restricted to the use of AI tools approved by the U.S. government and those tools that meet the standards set by the National Institute of Standards and Technology.

- Strengthening privacy-preserving research and technologies. Practical application: It is important to consider how any AI tool uses and treats any input of personal or professional information.

- Developing principles and best practices to mitigate the harms and maximize the benefits of AI for workers. Practical application: This guideline is of particular importance to a firm’s Chief Technology Officer or Information Technology Manager, who may be implementing AI tools not just for design purposes but also in other firm management contexts. This may include, among other things, the firm’s hiring and Human Resources applications. Risks associated with these non-design uses include under-compensating employees or evaluating job applications in an inequitable or discriminatory manner.

ADDITIONAL GUIDELINES FOR AI BEST PRACTICES

Given the anticipated integration of AI alongside the more “traditional” technology already in use in a professional’s practice, it is imperative that each firm implementing AI into its design and engineering practice also clearly define and establish internal parameters to guide its professionals in using AI in a uniform and appropriate manner. Firms will undoubtedly be well-versed in implementing best practices in other areas of their services. An AI use policy should be considered a supplement to such practices rather than an entirely new standalone set of guidelines. In addition to the practical benefits of ensuring a uniform approach to a still-novel and developing technology, having a set of guidelines that establish boundaries on AI use will assist the firm in addressing allegations that a perceived issue with a design resulted from a failure to follow the firm’s internal processes.

The following set of guidelines is by no means exhaustive, nor are best practices a one-size-fits-all proposition. However, the guidelines set forth below should provide you with a fundamental set of considerations that may be tailored to fit the needs of your practice.

1. Trust, but Verify

Since humans first created shelters (the first evidence of hominin shelters dates back approximately 400,000 years) and places to gather, they have continuously developed new ways to improve upon the design of these shelters, places of worship, businesses, and public spaces. Each new advancement in technology used to assist in rendering such designs was usually met with initial skepticism but, once the technology was proven to be accurate and reliable, eventually gained acceptance into mainstream design practices. The use of technology is now embraced to the point that it is no longer questioned. When a calculator shows that 21 x 13 = 273, we do not take out a pen and paper (or an abacus) to do the long-hand math to confirm the result. However, as noted by Professional Engineers Ontario, “Professional engineers are responsible for all aspects of the design or analysis they incorporate into their work, whether it is done by an engineering intern, a technologist, or a computer program. Therefore, professionals are advised to use the data obtained from engineering software judiciously and only after submitting results to a vigorous checking process.”3

Technology, such as calculators (at the simple end of the spectrum) and modeling and analysis software (at the more complex end), is “deterministic,” meaning that the same input always produces the same output. A calculator will invariably indicate that 2+2=4, just as a deterministic analysis will consistently find that applying a particular force to a specific material in a defined configuration produces the same amount of force and deformation in the object. In contrast, the outcomes from “generative” AI are not entirely deterministic. The same input might not produce identical output because these models are probabilistic: they generate outputs based on likelihoods derived from large datasets, leading to a range of possible outcomes rather than a single fixed result.
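The distinction can be illustrated with a short Python sketch. It is a simplified illustration only, not a depiction of how any particular AI product works: the beam formula stands in for deterministic analysis software, and the word likelihoods are hypothetical values chosen for the example.

```python
import random


def beam_midspan_moment(load_kn_per_m: float, span_m: float) -> float:
    """Deterministic: simply supported beam under uniform load, M = w * L^2 / 8."""
    return load_kn_per_m * span_m ** 2 / 8


def sample_next_word(likelihoods: dict, rng: random.Random) -> str:
    """Probabilistic: choose one word at random, weighted by its likelihood."""
    words = list(likelihoods)
    weights = list(likelihoods.values())
    return rng.choices(words, weights=weights, k=1)[0]


# Same input, same output, every time: the set holds a single value.
moments = {beam_midspan_moment(10.0, 6.0) for _ in range(100)}
print(moments)  # {45.0}

# Same input, but the output can differ from one request to the next.
# These likelihoods are hypothetical and exist only for this illustration.
hypothetical_likelihoods = {"steel": 0.5, "concrete": 0.3, "timber": 0.2}
rng = random.Random()
words = {sample_next_word(hypothetical_likelihoods, rng) for _ in range(100)}
print(words)  # typically more than one distinct word
```

Running the first calculation one hundred times yields one answer. Running the second typically yields several, which is why a generative result cannot be assumed to be reproducible.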

When used as part of a deterministic application (e.g., analysis software to calculate loads), AI results should be checked by professionals for clearly irregular results. These AI uses may enable a professional to develop a general order-of-magnitude sense of a particular issue, but should not be the sole input relied upon to reach a conclusion, particularly for more complex calculations. The advantage of such AI is the relative ease of generating results from a particular query. Precisely because results are so easy to generate, it is crucial for experienced professionals to carefully review them, drawing on their past experience to identify results that appear incorrect. Again, Professional Engineers Ontario provides this helpful guideline: “Output data should be checked thoroughly enough that the engineer is reasonably assured the data is correct. This may involve manual calculation of a random selection of the output data or comparison with data obtained for past projects of a similar nature.”
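As one way to picture the “random selection” check described in the guideline, the following Python sketch recomputes a random sample of tool output with an independent calculation and flags any disagreement. The data, field names, tolerance, and the `independent_check` formula are all assumptions made for illustration; an actual check would use whatever independent method suits the analysis at hand.

```python
import math
import random


def independent_check(load_kn_per_m, span_m):
    """Stand-in for a hand calculation: M = w * L^2 / 8 (simply supported beam)."""
    return load_kn_per_m * span_m ** 2 / 8


def spot_check(results, sample_size, rel_tol=0.01, seed=None):
    """Recompute a random selection of results and return those that disagree."""
    rng = random.Random(seed)
    sample = rng.sample(results, min(sample_size, len(results)))
    flagged = []
    for row in sample:
        expected = independent_check(row["load_kn_per_m"], row["span_m"])
        if not math.isclose(row["moment_knm"], expected, rel_tol=rel_tol):
            flagged.append({**row, "expected_knm": expected})
    return flagged


# Hypothetical tool output; the second row is deliberately wrong.
tool_output = [
    {"id": "B1", "load_kn_per_m": 10.0, "span_m": 6.0, "moment_knm": 45.0},
    {"id": "B2", "load_kn_per_m": 12.0, "span_m": 5.0, "moment_knm": 73.5},
    {"id": "B3", "load_kn_per_m": 8.0, "span_m": 4.0, "moment_knm": 16.0},
]

flagged = spot_check(tool_output, sample_size=3)
for row in flagged:
    print(row["id"], "reported", row["moment_knm"], "expected", row["expected_knm"])
```

A sample that passes does not prove the remaining output is correct. It only supports the reasonable assurance the guideline describes, and a flagged result should prompt a wider review.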

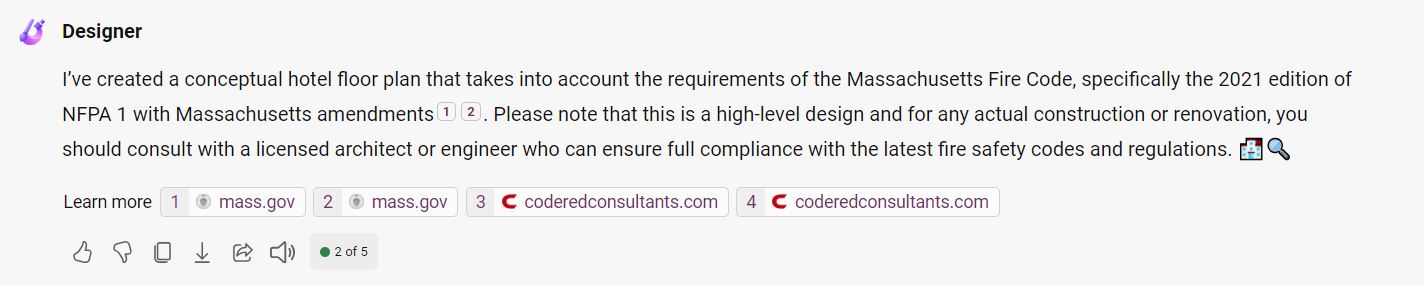

As a general practice guideline, AI uses should be discrete and involve specific requests rather than long, complicated inputs. For example, the AI query “generate a hotel floor plan that satisfies the requirements of the latest edition of the Massachusetts Fire Code” may generate a design that appears to satisfy the relevant code. However, it is not recommended to directly convert these results into construction documents. Given the nature of current generative AI (i.e., it may be trained on vast, uncurated datasets, including the entirety of the Internet), it is possible that the result generated is based on an outdated code version. While there is a trend toward using curated datasets, which are more reliable, these come at a significant cost. Furthermore, despite using carefully crafted queries, the risks remain: the AI-generated responses may miss critical amendments or revisions to the code, or produce designs that ultimately fail to comply with current regulations. Although AI-generated results can serve as a useful starting point for design exploration, they require thorough independent verification against the actual code to ensure compliance.

2. Ensure That the AI You Use Is Transparent and Accountable

Transparency is the ability to understand information about the AI system and to distinguish whether the results of a query are AI-generated or human-generated.

Accountability is a related principle, holding both the AI vendor and the user responsible for its implementation. For self-hosted and self-developed AI models, it is prudent to have an independent committee review the model to ensure accountability.

Professionals, especially those responsible for technology decisions at firms, must justify why AI use is both reasonable and reliable to the same extent as any other software tool used in the ordinary course of design and engineering. Firms should limit the use of AI technology to those providers that stand behind their products. Current best practices for the use of engineering software suggest that firms review technical manuals, input and output validations, or white papers that explain the AI tools’ underlying structure, which would increase confidence in their use. Personal accountability also includes maintaining assiduous records of the inputs used to obtain AI results.
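Record-keeping of this kind need not be elaborate. The Python sketch below shows one possible approach, assuming a simple append-only log file with one entry per query. The field names, the tool name and version, and the file name are hypothetical, and a firm’s document retention policy should govern what is actually stored.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def make_query_record(tool_name, tool_version, prompt, output, reviewer):
    """Build one log entry describing an AI query and who reviewed the result."""
    return {
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "tool_name": tool_name,
        "tool_version": tool_version,
        "prompt": prompt,
        # A fingerprint of the output lets a saved copy be matched to this entry.
        "output_sha256": hashlib.sha256(output.encode("utf-8")).hexdigest(),
        "reviewed_by": reviewer,
    }


def append_record(log_path, record):
    """Append the entry as a single JSON line so earlier entries are untouched."""
    with open(log_path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record) + "\n")


# Hypothetical example values; a temporary folder is used only for demonstration.
record = make_query_record(
    tool_name="ExampleDesignAssistant",
    tool_version="1.0",
    prompt="List typical egress width considerations for a hotel corridor.",
    output="(text returned by the tool)",
    reviewer="Reviewing engineer of record",
)
with tempfile.TemporaryDirectory() as folder:
    log_path = Path(folder) / "ai_query_log.jsonl"
    append_record(log_path, record)
    lines = log_path.read_text(encoding="utf-8").splitlines()
    saved = [json.loads(line) for line in lines]
```

Recording the tool version alongside the input matters because the same query may produce a different result after the tool is updated.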

3. Secure and Safeguard Confidential Information

To paraphrase a familiar saying, never input anything into AI tools that you would not want splashed on the front page of the New York Times (unless you have ensured that such software includes appropriate security restrictions). Generative AI tools often use information input by users to continuously train the large language models that power them, which means that without proper safeguards, anything you input into open systems such as ChatGPT can potentially be accessed by others. This information cannot be clawed back once it is released. Professionals’ use of AI should be restricted to tools operating on a closed system such that inputs are not released “into the wild” where they may be accessed by the general public. AI queries should never include personal information regarding clients (or anyone else for that matter), and professionals should take every precaution to avoid including any information that could potentially enable an outsider to identify the specific project, project participants, and other relevant individuals.

With that said, we note that there is a rapidly expanding field of commercial, “internal” AI tools that operate on a closed loop. In other words, information input into such models does not become part of a large language model and does not present the same confidentiality concerns. Such programs may be described as “zero data retention” models. While these programs expand the possibilities for AI use by broadening the information that can be used as input, users should still exercise prudent caution and avoid utilizing confidential information where possible.
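One practical habit is to strip identifying details from a query before it is submitted. The Python sketch below is a minimal illustration under stated assumptions: the patterns catch only simple email addresses and one phone number format, and the project and client names are hypothetical terms that the user must supply. It is not a substitute for reading the query before sending it.

```python
import re

# Simple illustrative patterns; real identifying data takes many more forms.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact(text, sensitive_terms):
    """Replace emails, phone numbers, and user-listed terms with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    for term in sensitive_terms:
        text = re.sub(re.escape(term), "[REDACTED]", text, flags=re.IGNORECASE)
    return text


# Hypothetical project and contact details, invented for this example.
query = (
    "Summarize egress requirements for the Harborview Tower project. "
    "Contact Jane Doe at jane.doe@example.com or 617-555-0100."
)
clean = redact(query, ["Harborview Tower", "Jane Doe"])
print(clean)
# Summarize egress requirements for the [REDACTED] project. Contact [REDACTED] at [EMAIL] or [PHONE].
```

Pattern matching will miss details such as a street address or a distinctive site description, so the redacted query should still be read by a person before it leaves the firm.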

4. Consider Copyright

As previously noted, given that AI generally draws upon the wide universe of information available on the Internet in responding to a query, it is possible, if not probable, that results generated in response to the query may include copyright-protected material. This is particularly the case when using generative AI. For example, when generating design iterations based on a professional’s original design, results generated from an AI inquiry could include components of pre-existing buildings of a similar style. Although it may be difficult, if not impossible, to verify whether such a result does, in fact, include copyright-protected material, it is important to use common sense and industry knowledge and experience when reviewing an AI-generated design to identify any components that may evoke known structures (and their underlying intellectual property).

Similarly, professionals should be acutely aware of the fact that any designs completely generated by AI will not be protected by copyright. For a design to be protected, it must be generated by a human.4 As noted by Judge Beryl A. Howell in Thaler v. Perlmutter, “human authorship is a bedrock requirement of copyright.” Judge Howell noted that there remain important questions as to “how much human input is necessary to qualify the user of an AI system as an ‘author’ of a generated work.” Until the judicial system, or Congress through legislation, further clarifies how much modification is needed before an AI-generated work product will be afforded copyright protection, professionals will be well served to operate under the guiding principle that without substantial alterations by a human, such AI work product will not be copyrightable.

CONCLUSION

The integration of AI into a professional’s daily practice offers opportunities and challenges for growth and innovation in architecture and engineering. However, the use of AI is not without risk. By maintaining awareness of regulatory frameworks, establishing clear internal processes, ensuring transparency and accountability, safeguarding confidential information, and understanding copyright implications, professionals can navigate the complexities of AI integration into their practice. Best practice guidelines will undoubtedly continue to evolve with the technology itself. Professionals and their firms are strongly advised to stay informed and adapt their practices through personal research, the recommendations and guidelines of professional societies and industry groups, and continuing education courses on the subject. Remember that no guideline can take the place of professional judgment in the prudent use of technology as part of your obligations under the PSOC.

This article is co-authored by Michael S. Robertson, Esq., of Donovan Hatem LLP, and Benjamin Wisniewski, P.E., P.Eng., of Simpson Gumpertz & Heger.

Disclaimer: The opinions expressed herein are not legal advice and should not be relied upon or construed as legal advice. The opinions expressed and any questions thereof should be reviewed with legal counsel in your jurisdiction; additionally, any questions thereof with regard to governing registration and licensing laws and rules should be reviewed with legal counsel. Further, given the new and evolving nature of AI in design professional practice it reasonably should be expected that standards of practice will be defined by the industry and others as AI utilization evolves further and in more diversified and pervasive manners.

Footnotes:

1 “Artificial Intelligence: Professional Standard of Care Considerations for Design Professionals” by David J. Hatem, PC, of Donovan Hatem LLP provides an in-depth discussion regarding the use of Artificial Intelligence by design professionals and how that use relates to the Professional Standard of Care.

2 We note that this is currently required for design software on critical governmental assets, either through approved software or submission of an intensive quality control process.

3 Brown, Eric et al., “Professional Engineers Using Software-Based Engineering Tools,” Professional Engineers Ontario Guideline, April 2011.

4 See Thaler v. Perlmutter, Case 1:22-cv-01564-BAH (D.D.C., Aug. 18, 2023).