Seven Questions on Artificial Intelligence and Its Use by AEC Design Professionals

Evolving advances in artificial intelligence (AI) pose real and current challenges, risks, and opportunities for the AEC design professional community. In their 2023 study of ChatGPT's performance on the Fundamentals of Engineering exam, Pursnani, Sermet, Kurt, and Demir observed that ChatGPT using GPT-4 achieved a potentially passing score of 75.37%.¹ We are entering unique times, which prompted us to develop these seven questions on AI and its use by AEC design professionals to help guide you through the advances being made.

1. What developments in AI technology are driving the need for this discussion?

Good first question! Two things. The first is the leap in language-based interaction with AI technologies to, or close to, a level we consider human thought. These technologies now handle many forms of data, including audio and video, combining large computational abilities with human-like results in significantly less time than before.² The second is the ease with which anyone with an internet connection can access these tools. This upends the past pattern, in which new cutting-edge technology was often prohibitively expensive and accessible only to a few.

2. What type of engineering or design work is more or less suitable or appropriate for AI?

Now this is where it gets interesting. The standard responses to this question in trade journals so far have typically centered on increased task automation, with the argument continuing in a vein similar to "freeing up humans to do more valuable work." We consider this a fallacy for two reasons. First, the immense computing power developed over the last ten to fifteen years has already allowed for significant task automation wherever people have managed to identify it; the limitation on task automation has been human adoption, not the technology. Second, the question presupposes a division of work between human and computer (yes, we asked the question that way on purpose). The question should be, "How should we work with AI?" Once we frame the inquiry this way, it becomes more about the individual. Here, the analogy of AI as your assistant (your "copilot," etc.) becomes relevant, because the extent of the work AI can and will perform depends on how clearly you can describe it. This is not "What can AI do for you?" but "What can you do with AI?" Technological progress will move at the speed at which humans adopt the technology (for now), which is typically the speed at which humans feel they can trust the technology while remaining in control.

3. Can AI perform work that is currently subject to the professional engineer sign/stamp process?

In your current work as a licensed engineer, you likely use technology to assist you with almost all your tasks: spellcheck and grammar suggestions in emails and reports, analysis software to obtain results for sizing beams, BIM tools to document your work, and maybe even a digital stamp. Work assisted by these technologies is still considered "engineering." So, to the extent you continue to use technology to assist in your work, nothing has really changed, because to date, regardless of how much technology you use, you are still the one making the decisions. In addition, there are the complex, human-centered areas of interdisciplinary engineering and construction coordination that require human experience to communicate and direct.

4. But this does seem different now, doesn't it?

AI-based technologies are making, or providing options for, more decisions, and doing so in a way that is not always explicit or understood (the "black box" problem). This is correct; hence the new discussions we are all having. But despite all the current technological progress, certain aspects of engineering practice have not changed, namely:

- AI technology is not a person; therefore, AI cannot legally assume design professional responsibility. AI presently cannot meet certain ethical obligations of professional engineers. These ethical obligations vary by state but generally include the obligation to protect the health and safety of the public. Because AI is not a person, it currently cannot make a legally recognized assertion that all of the engineering and design work subject to the professional engineer sign/stamp process was prepared by it or under its direct supervision, a representation made to protect the health and safety of the public. Moreover, AI is (currently) not sentient, so it lacks the agency that ethical responsibility requires.

- Each project must have a licensed engineer who stamps the design and takes responsibility for it as the engineer of record (EOR). As technology progresses, AI-based software will do more engineering work than before, including more of what is currently considered "engineering design" services. Given this shift of work and tasks, how does EOR responsibility remain with the EOR individual or firm? The EOR's firm must remain responsible for errors or omissions in the EOR's signed and stamped design documents. In addition, technology firms that provide software products or services will likely have rock-solid clickthrough terms of use and licensing agreements that disclaim all liability for the output of those products or services, putting the onus on the end user to verify the output.

5. Are there certain categories of work that should not be performed by AI?

This is a fascinating question, as it relates directly to what makes us uniquely human. As of now, AI can be used for many things, and the question of "should" will depend on the specifics of the project or process at hand. But one aspect is clear: AI is not human. As such, AI is currently precluded from exercising rights and responsibilities reserved for humans (e.g., being listed as the inventor on a patent). Wherever wisdom, experience, and intuition are required to anticipate and plan for change in a poorly constrained system, especially in large group settings, we continue to see the need for an experienced human, or group of humans, to drive the process. However, what it means to be creative or unique is not clearly defined, and we expect this will be an area needing more discussion going forward.

For example, in the United States, AI-generated work is currently not copyrightable because it does not meet the requirement of being "created by a human being"; it would require creative and transformative human input to become copyrightable. Although there is no well-defined way to determine how much human input is needed to make AI-generated work copyrightable, human input remains essential to the final work product of design professionals. Many contracts for design professional services stipulate that, upon full payment for services performed, any interest in the design documents, such as copyrights, is to be assigned to the design-builder or owner, and without sufficient human authorship there may be no copyright to assign.

6. What quality assurance/quality control (QA/QC) procedures should be implemented with AI work product? How much work can be done by AI without human supervision?

We noted above the concern that AI systems are a "black box." This concern stems from our experience with established computational analysis in commercial, tested software, whose methods are prescriptive and explicitly defined or derived. What we now have, in many ways, are AI results that more closely resemble human responses (after all, we are black boxes ourselves!). With that viewpoint, we recommend that the QA approach to AI-produced work be similar to checking the work of another human: being conscious of possible bias, blind spots, overconfidence, illogical leaps, etc., and finding ways to sanity-test the results. However, within the context of QA/QC, humans are at a disadvantage when questioning the choices of AI, because AI responses lack the verbal and non-verbal cues that inform our evaluation of answers provided by humans.

Looking at this the other way around, a clear issue we currently confront is that humans check work, and humans get tired and distracted. This issue applies to the EOR, especially for critical matters affecting the health and safety of the public. So, for large-volume work (i.e., truly big data, such as gigabytes of output that humans cannot realistically check) where the checking is wholly or partly prescriptive, checking by AI could outperform humans (and would certainly be faster), and at a minimum it could identify areas for people to concentrate on. We are seeing versions of this in other professions, such as medicine, where the application of AI to radiology scans has improved diagnostic accuracy and patient outcomes. In the legal field, AI-driven document review services can perform an initial review of terabyte-scale productions containing millions of documents, allowing lawyers to focus their review on a more manageable set.
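To make "prescriptive checking that identifies areas for people to concentrate on" more concrete, here is a minimal Python sketch. It is a simple rule-based screen, not an AI model, and it assumes hypothetical analysis output in which each member has a demand and a capacity in consistent units. The class and function names, member IDs, numbers, and the 0.95 review threshold are all illustrative assumptions, not values from any design standard.

```python
from dataclasses import dataclass


@dataclass
class MemberResult:
    """One row of hypothetical analysis output for a structural member."""
    member_id: str
    demand: float    # e.g., factored moment from the analysis
    capacity: float  # e.g., design strength for the same member, same units


def flag_for_review(results, review_threshold=0.95):
    """Return (member_id, ratio) pairs a person should look at, worst first.

    The threshold is an illustrative assumption, not a code-prescribed value.
    """
    flagged = []
    for r in results:
        if r.capacity <= 0:
            # A zero or negative capacity suggests bad input data; always flag it.
            flagged.append((r.member_id, float("inf")))
            continue
        ratio = r.demand / r.capacity
        if ratio >= review_threshold:
            flagged.append((r.member_id, ratio))
    # Highest demand-to-capacity ratio first, so reviewers start with the worst.
    return sorted(flagged, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    sample = [
        MemberResult("B-101", demand=120.0, capacity=200.0),  # ratio 0.60
        MemberResult("B-102", demand=195.0, capacity=200.0),  # ratio 0.975
        MemberResult("B-103", demand=230.0, capacity=200.0),  # ratio 1.15
        MemberResult("C-201", demand=50.0, capacity=0.0),     # suspect input
    ]
    for member_id, ratio in flag_for_review(sample):
        print(f"{member_id}: demand/capacity = {ratio:.2f}")
```

In practice, the screening rules would come from the governing design standard and the firm's own QA/QC procedures; the sketch only shows how a prescriptive rule can triage output that no one could realistically read line by line, leaving the engineering judgment on the flagged items to a person.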

7. When should the use of AI be disclosed to clients?

Well, as we noted above, you've already been using various forms of AI in your work through spelling and grammar checkers and through analysis and drafting software applications with embedded AI. We expect that those uses have occurred without explicit disclosure, as that is not the current standard. There is also the practical difficulty of accurately identifying how, where, and to what extent AI is used, especially as a person works with it interactively. So, we currently consider appropriate use of AI to consist of training along with approved guardrails (example below), without the need to add explicit disclosures. The one exception we consider appropriate is the use of AI tools to generate or alter audio, images, and video, simply because this has both copyright and credibility consequences. In these cases, an explicit disclosure of the use, and its extent, is appropriate.

Sample AI Guardrails

Generative artificial intelligence (Gen AI) presents new opportunities for responsible use in our work. The following principles apply to all of our work but are stated here with specific reference to our use of Gen AI and similar tools at our firms.

- What we save in time, don’t lose in intellect. Independently test, verify, and validate responses such that they become your work product.

- Comply with confidentiality, privacy, data protection, and export control requirements. Do not prompt with project-specific information.

- Protect the firm’s intellectual property and respect others’ intellectual property. Information entered into Gen AI systems is not private.

- Discuss with team members how and when Gen AI is used on your projects. Share what you learn.

- Follow normal quality procedures as documented in our firms’ corporate quality manuals.

So where is this going next? Here are three bonus questions on themes we expect will continue to develop, along with our early thoughts.

8. When will it be unethical or illegal NOT to use AI?

This may seem an odd thought, but it is already part of the philosophical discussion around self-driving car technology. The logic is that a technology does not have to be perfect; once it is demonstrably better at what it does than most humans, it must be used, essentially because the outcome is better for life safety and for society overall. We understand this is an uncomfortable idea related to giving up (more) control to technology, but there is a logic to it that will continue to be part of the discussion, and it's best we start thinking about it now.

9. Can AI become, or achieve the designation of, a professional engineer?

Current regulatory structures allow only a human to be a professional engineer. However, we can easily envision a scenario where AI, with access to all the documents and previous designs in a company's system, produces work based solely on previously stamped work product. So, what does this mean both for the role of the professional engineer and for the business model of a licensed design professional? We noted above how we will have to adapt our QA approach to rely more on sanity checks, but how will future engineers learn to sanity-check work without first doing the work themselves? And what will it mean for business models when work is generated in far less time (e.g., "DDs in 10 minutes") or when clients paying for time expect every time-saving tool to be used? We expect business models to change, while society will also demand (for now) that a human remain in charge.

10. How do we know where this is going and how fast?

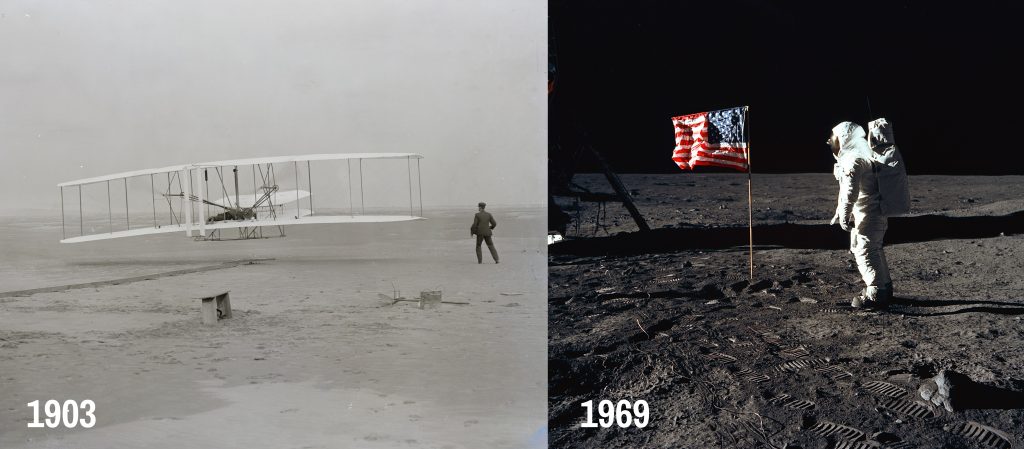

The short, and uncomfortable, answer is we don’t. But we do know time moves faster with each step in technological progress. Below are comparison images showing it took just 66 years to go from Kitty Hawk to the Moon.

Technological leap: it took just 66 years to go from Kitty Hawk to the Moon.

We think this is a more apt comparison of a technological leap than the typical image of a room of drafters set beside an engineer using BIM, because we believe the AEC profession (and, to a great extent, all professions) is just reaching its own taking-flight moment, at the start of immense and rapid progress. We don't know where this is going, but we hope to enjoy the ride!

This article is co-authored by Paul E. Kassabian, P.E., P.Eng. (BC), C.Eng. (UK), of Simpson Gumpertz & Heger and John M. Lim, Esq., of MG+M The Law Firm.

Disclaimer:

The opinions expressed herein are not legal advice and should not be relied upon or construed as legal advice. The opinions expressed and any questions thereof should be reviewed with legal counsel in your jurisdiction; additionally, any questions thereof with regard to governing registration and licensing laws and rules should be reviewed with legal counsel. Further, given the new and evolving nature of AI in design professional practice it reasonably should be expected that standards of practice will be defined by the industry and others as AI utilization evolves further and in more diversified and pervasive manners.

Footnotes:

1 Pursnani, V., Sermet, Y., Kurt, M., & Demir, I. (2023). Performance of ChatGPT on the US Fundamentals of Engineering Exam: Comprehensive Assessment of Proficiency and Potential Implications for Professional Environmental Engineering Practice. Computers and Education: Artificial Intelligence, 5, 100183. https://doi.org/10.1016/j.caeai.2023.100183

2 Examples of AI technologies include the following:

- Generative AI tools that create photorealistic images based on user prompts, such as DALL·E, Stable Diffusion, and Midjourney

- AI tools that generate multiple design options based on client specifications, such as Maket.ai

- AI-generated 3D modeling tools, such as Arko.ai

- AI-driven tools for BIM collaboration between architects, engineers, and builders, such as BricsCAD BIM